Zero Density Reality Virtual Studio Solution

Zero Density is an international technology company dedicated to developing creative products. The company offers the next level of virtual production with real-time visual effects.

Reality Virtual Studio and Augmented Reality Solution

Reality from Zero Density is the ultimate real-time node-based compositor, enabling real-time visual effects pipelines that feature video I/O, keying, compositing and rendering in a single piece of software. As the most photo-realistic virtual studio production solution, Reality gives its clients the tools to create the most immersive content possible and to revolutionize storytelling in the broadcasting, media and cinema industries.

Photo-realism

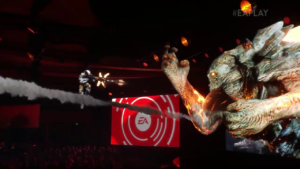

Reality uses Unreal Engine by Epic Games, the most photo-realistic real-time game engine, as its 3D renderer. With its advanced real-time visual effects capabilities, Reality ensures the most photo-realistic composite output possible.

Compositing in 3D space

As a unique approach in the market, Reality composites the green screen image with the graphics inside the 3D scene. This technique produces realistic real-time reflections and refractions of the physical objects and the people in front of the green screen on top of the graphics. Bloom effects and lens flares are also composited over the real elements. All compositing is done in 16-bit floating point for HDR precision.

Reality Keyer™

Reality Keyer™ provides spectacular results when keying contact shadows, transparent objects and sub-pixel details like hair. Reality Keyer™ can also work with 4:4:4 RGB camera sources. In addition to a garbage mask, the keyer supports 3D masks for “force-fill” and “spill bypass” buffers. These masks enable hybrid virtual studio operation, which lets you combine virtual and real environments. Despite the quality of its results, Reality Keyer™ remains very easy to set up and operate.

16-Bit Floating-Point Compositing

As a unique approach in the industry, Zero Density’s Reality Engine composites images using 16-bit floating-point arithmetic. Because Reality Engine controls the full compositing pipeline, all gamma and color space conversions are handled correctly. With this technology, the real world and the virtual elements are blended seamlessly. Although Reality Engine can provide fill and key outputs for external devices (such as vision mixers) like conventional solutions, compositing in Reality Engine is recommended.
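To illustrate why handling gamma correctly matters, here is a minimal sketch of a linear-light "over" composite in 16-bit floating point. It is not Zero Density's implementation: the function names are hypothetical, NumPy's `float16` stands in for GPU half-float buffers, and the sRGB transfer function is assumed purely for illustration.

```python
import numpy as np

def srgb_to_linear(c):
    """Decode sRGB-encoded values in [0, 1] to linear light."""
    c = np.asarray(c, dtype=np.float32)
    return np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)

def linear_to_srgb(c):
    """Encode linear-light values back to sRGB (negative values are clipped to 0)."""
    c = np.clip(np.asarray(c, dtype=np.float32), 0.0, None)
    return np.where(c <= 0.0031308, c * 12.92, 1.055 * c ** (1 / 2.4) - 0.055)

def composite_over(fg_srgb, alpha, bg_srgb):
    """Blend foreground over background in linear light, stored as float16."""
    fg = srgb_to_linear(fg_srgb).astype(np.float16)
    bg = srgb_to_linear(bg_srgb).astype(np.float16)
    a = np.asarray(alpha, dtype=np.float16)
    out = fg * a + bg * (np.float16(1) - a)  # the blend itself runs in float16
    return linear_to_srgb(out.astype(np.float32))

# A 50% blend of white over black in linear light encodes to roughly 0.735 in
# sRGB, not the 0.5 that blending the encoded values directly would give.
half = composite_over(np.array([1.0]), 0.5, np.array([0.0]))
```

Blending the gamma-encoded values directly would darken soft edges such as hair and contact shadows, which is the kind of error a pipeline with correct conversions avoids.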

Advanced Augmented Reality

Using the tracked camera feed as an input image, Reality Engine is able to render reflections and refractions of the real environment onto the virtual objects. Real-time 3D reflections and the shadows of the virtual objects are also composited over the incoming camera feed. Blooms and lens flares caused by bright virtual pixels are also blended into the final composite.

Reality Engine

Reality Engine is a real-time node-based compositor which enables post-production style visual effects pipelines in the live video production domain.

Reality Engine uses NVIDIA Quadro GPUDirect technology for high-performance video I/O to streamline real-time 4K UltraHD workflows.

Tracking and Lens Calibration

Reality supports industry-standard tracking protocols and devices. Reality is able to use the lens calibration data sent by the tracking devices.

Reality can also provide its own lens calibration data for accurate lens matching. Reality Engine uses focus distance calibration data to match the virtual graphics to the tracked lens focus at every frame. This feature gives the camera operator focus control over the virtual graphics.
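As a rough illustration of how focus distance calibration data can be applied per frame, the sketch below maps a raw focus-encoder reading to a focus distance by interpolating a calibration table. The table values, the names and the use of simple linear interpolation are all assumptions made for this example; they do not describe Reality's actual calibration format.

```python
import numpy as np

# Hypothetical calibration table: raw focus-encoder readings and the focus
# distance (in metres) measured at each reading. Values are made up.
ENCODER = np.array([0.0, 1000.0, 2000.0, 3000.0, 4000.0])
DISTANCE_M = np.array([0.5, 1.0, 2.0, 5.0, 20.0])

def focus_distance(encoder_value):
    """Interpolate the focus distance for one tracked encoder reading.

    Readings outside the calibrated range are clamped to the end points.
    """
    return float(np.interp(encoder_value, ENCODER, DISTANCE_M))

# Called once per frame with the tracked encoder value; the result would
# drive the virtual camera's focus distance.
mid_focus = focus_distance(1500.0)
```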

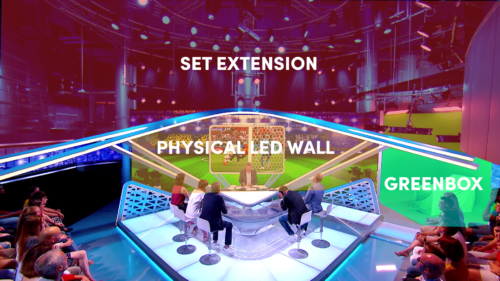

Video Walls and Portal Window

Reality can feed your video walls with high-resolution graphics. By using camera tracking data, you can even turn your video wall into a portal to a virtual environment. By combining a portal window with a ceiling extension, you can have a virtual studio without a green screen.
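One common way to render such a portal is an off-axis (asymmetric) projection, in which the view frustum is recomputed every frame from the tracked camera position and the physical corners of the wall. The sketch below shows that generic technique under stated assumptions; the function name and coordinate conventions are hypothetical, and it is not a description of how Reality implements the feature.

```python
import numpy as np

def off_axis_frustum(lower_left, lower_right, upper_left, eye, near):
    """Return (left, right, bottom, top) frustum extents at the near plane.

    The three corners locate the video wall in studio space; `eye` is the
    tracked camera position in front of the wall. All points share one
    coordinate system and unit.
    """
    pa, pb, pc, pe = (np.asarray(v, dtype=float)
                      for v in (lower_left, lower_right, upper_left, eye))
    vr = (pb - pa) / np.linalg.norm(pb - pa)  # unit vector along the wall's width
    vu = (pc - pa) / np.linalg.norm(pc - pa)  # unit vector along the wall's height
    vn = np.cross(vr, vu)                     # wall normal, pointing at the viewer
    vn /= np.linalg.norm(vn)

    va, vb, vc = pa - pe, pb - pe, pc - pe    # eye-to-corner vectors
    distance = -np.dot(va, vn)                # eye-to-wall distance along the normal
    scale = near / distance
    return (np.dot(vr, va) * scale, np.dot(vr, vb) * scale,
            np.dot(vu, va) * scale, np.dot(vu, vc) * scale)

# A 4 m x 2 m wall centred on the origin, camera 2 m in front and 1 m to the right.
extents = off_axis_frustum([-2, -1, 0], [2, -1, 0], [-2, 1, 0], [1, 0, 2], near=0.1)
```

With the camera centred on the wall the frustum is symmetric; as the camera moves sideways the extents skew, which is what makes the wall read as a window onto the virtual set rather than a flat picture.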

Reality Broadcast Workflow Tools

Reality provides the control tools needed for multicam studio operations. Reality Control Suite has plugins that drive Reality Engine with external data for data-driven workflows.
